TL;DR at the bottom

On 23rd January, The Entrepreneurship & Innovation Cell (EIC) of our college conducted their annual ESummit. They had a few events out of which one was The Startup Sprint: a 30 hour long hackathon meant for students who wanted to start a company. It focused ~60% on the Business Part and ~40% on the Technology Part. The hackathon began on Thursday afternoon at 2pm and was to end on Friday evening at 8pm. I initially had no intention of participating because I wanted to chill and I was done with hackathons for a while. My friend (Friend A), the president of the EIC and organizer of the ESummit, asked me to take care of the media coverage (photos and videos) for the hackathon and other events of the ESummit. He had a montage sort of vision and wanted me to take photos and videos with this in mind.

I went to the hackathon and started taking a few videos, shortly after which I sat down because I’d had enough. I don’t know what happened, but at around 4 PM my interest was piqued and I became slightly more inclined to participate. It was around 8 PM that I decided to actually participate in the hackathon with an idea in mind: an Automatic Video Editor. Why? I didn’t feel like editing the raw footage that the media team would take for the montage. It was too much effort. Instead, I decided to make a program that would edit the video for me. A very far-fetched thing, yes, but hey 🤷, it was an interesting idea at least for a blog post in the event that it didn’t work out.

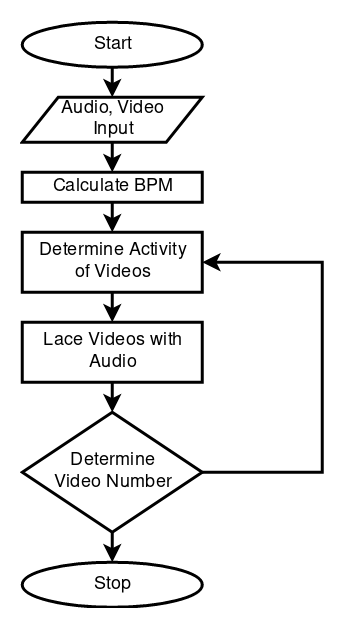

I started planning at around 8:30 PM and came up with this basic flowchart (it’s nothing fancy):

Flowchart boi

The plan was to create an Automatic Video Editor that would be personalized for me. Between events and different editing files, my program was to understand how I generally edit my videos and “learn” my style so that the next time I upload my raw footage and audio, it would do a primitive edit and let me figure out the rest. The main users were to be people who wanted to make videos, hobby editors, people who wanted to learn how to edit videos and YouTube Vloggers (or Vloggers in general). When a Vlogger becomes big, they usually decide to shell out more content and have less time for editing and hence hire full time editors. My program would simplify their editing so that they wouldn’t have to spend too much time on editing. Seemed pretty solid.

I started working on the audio part first to figure out how to do beat sync. I scoured the internet for Python libraries and eventually found aubio, which could analyze audio files for these four properties: Onset, Pitch, Tempo and Notes. I worked until 4 AM on utilizing these four classes to get what I wanted before I took a break to get some food. I returned at 4:30 AM and worked till 6 AM, which is when I finished working on the audio part. I decided to go back and sleep for an hour. I woke up 4 hours later 🤦‍♂️ realizing I had 30 minutes to get to class (it was still a working Friday). I attended Computer Networks and went to the hackathon after class. At this time, I asked my friend (Friend M) if he was interested in helping out with my idea. He seemed enthusiastic and agreed. Yay! An extra hand.

We started working at 1 PM. The atmosphere: people coming in the room and leaving the room every few minutes. But that didn’t bother us. We sat all the way at the back with our headphones on and started working after we split the work. He started working on how to piece together images into a video and eventually made a nice little class that accepted image paths and durations that would get turned into a video using OpenCV. I was working on creating a master program that controlled the process.
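For the curious, here is a rough sketch of the bookkeeping a class like Friend M's has to do before anything is handed to OpenCV: turning per-image durations into whole frame counts. This is not our actual code. The function name `frame_counts` and the choice to round against the running total (so rounding errors don't pile up over many images) are my own assumptions for illustration.

```python
def frame_counts(durations, fps):
    """Return how many frames each image should occupy at the given fps.

    Rounds against the cumulative timeline so that the total number of
    frames stays close to sum(durations) * fps.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    counts = []
    elapsed = 0.0      # seconds of video scheduled so far
    frames_so_far = 0  # frames already assigned to earlier images
    for duration in durations:
        if duration < 0:
            raise ValueError("durations must be non-negative")
        elapsed += duration
        target = round(elapsed * fps)  # total frames that should exist by now
        counts.append(target - frames_so_far)
        frames_so_far = target
    return counts


if __name__ == "__main__":
    # Three images: half a second, half a second, one second, at 24 fps.
    print(frame_counts([0.5, 0.5, 1.0], 24))
```

Each image would then be written to the video writer that many times in a row.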

At around 3 PM we had an initial judging (non-evaluated). The judges were two of our professors and they were quite happy with our progress. We showed them plots of the pitch and the audio analysis part of the program and they saw that the program was working audio-wise. Nothing too harsh from their side. After the initial judging, we decided to work on images and not videos as the computation time for videos was way too high and time was running short. We had T minus 4 hours 30 minutes. We now had to decide what feature we needed to implement for the transitions of images. We thought about it and I suggested most prominent colors using KMeans which seemed the simplest to implement. Friend M decided he’d implement this while I attempted to make the association learning for the “learning” part.

At around 7 PM, we got news that one of the judges wouldn’t be able to make it for the final evaluation and that we had two options: submit the code and present it the next day at 9 AM, or work till 9 AM and present it then. It was unanimously decided by all teams to work through the night till the next morning. We were quite relieved because we had a bit more to go through. We got back to work and I started working on the master program trying to figure out the association while Friend M continued working on the images and the similarity of the colors of the images (we tried to use CIEDE2000 but ultimately settled on Euclidean distance as we were just trying to show a proof-of-concept). As the night began to draw on, some of my friends who were also participating started to feel the effects of sleep deprivation. To say that they went crazy is an understatement. Unfazed by them and their idiocy, both of us kept working.
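To make the "most prominent colors plus Euclidean distance" idea a bit more concrete, here is a tiny hand-rolled sketch. It is not our hackathon code: we used a ready-made KMeans, and the function names, the `k`, `iterations` and `seed` parameters, and the seeding strategy below are all made up for illustration. Pixels are assumed to be `(R, G, B)` tuples.

```python
import math
import random


def euclidean(c1, c2):
    """Straight-line distance between two colors in RGB space."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c1, c2)))


def dominant_colors(pixels, k=3, iterations=10, seed=0):
    """Basic k-means over RGB tuples; returns centroids, biggest cluster first."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    unique = sorted(set(pixels))
    if len(unique) < k:
        raise ValueError("need at least k distinct colors")
    # Hypothetical seeding choice: start from k distinct pixel values.
    centroids = random.Random(seed).sample(unique, k)
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in pixels:
            nearest = min(range(k), key=lambda i: euclidean(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(ch) / len(members) for ch in zip(*members))
    ranked = sorted(zip(clusters, centroids), key=lambda pair: len(pair[0]), reverse=True)
    return [centroid for _, centroid in ranked]


if __name__ == "__main__":
    mostly_red = [(250, 10, 10)] * 6 + [(10, 10, 250)] * 3
    mostly_blue = [(10, 10, 250)] * 6 + [(250, 10, 10)] * 3
    a = dominant_colors(mostly_red, k=2)[0]
    b = dominant_colors(mostly_blue, k=2)[0]
    print(a, b, euclidean(a, b))
```

Two images whose top colors are close together in RGB space get a small distance. CIEDE2000 would track human perception better, but plain Euclidean distance is enough for a proof-of-concept.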

Around 3 AM on Saturday, I decided to focus completely on the master program. Integrating the audio and video part was a challenge, and making a metadata file that preserved the attributes we required proved to be the most challenging part. The beat sync part is very hodge-podgy and isn’t well programmed (it doesn’t work 100% well and will have flaws for some select audio) and it is for this reason that I’m choosing not to upload our class to GitHub. Instead, a fixed beat-time will have to be provided by whoever wishes to use the program. Friend M took over the association part and finished the rest of it by 6 AM. All that was left was for me to finish the master program. I finished it at around 7:30 AM and the judges were supposed to come at 8 AM to start announcing what they wanted to announce. (Note: They didn’t show up at 8 AM, but at 10:30 AM instead. We thought we only had a half hour left, so the tension and pressure were high.)
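If you are wondering what "providing a fixed beat-time" could look like in practice, here is a minimal sketch under my own assumptions. Neither function is from our repository, and the names and the `beats_per_image` parameter are hypothetical. The first works from a known tempo, the second from a list of beat timestamps such as an audio analysis step might produce.

```python
def durations_from_bpm(bpm, num_images, beats_per_image=4):
    """Give every image the same duration: a fixed number of beats."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    seconds_per_beat = 60.0 / bpm
    return [seconds_per_beat * beats_per_image] * num_images


def durations_from_beats(beat_times, beats_per_image=4):
    """Cut on every Nth detected beat and return the gaps between cuts."""
    if beats_per_image < 1:
        raise ValueError("beats_per_image must be at least 1")
    cuts = beat_times[::beats_per_image]  # timestamps (seconds) where images change
    return [later - earlier for earlier, later in zip(cuts, cuts[1:])]


if __name__ == "__main__":
    print(durations_from_bpm(120, 5))
    print(durations_from_beats([0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0], beats_per_image=2))
```

The second version is closer to what a real beat sync would need, since songs rarely hold a perfectly steady tempo.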

With less than an hour (or so we thought) we checked that everything was working. The association, sequencing of images and audio beat sync primarily worked and all that was left was to make the video from whatever our program calculated. Our program generates a video from five images from a folder of images and a path to an audio file. When we tested it out the first time, there was a massive issue: There was only one image in the video. We freaked out. There was less than a half hour now and our only way of proving what we did was right was not working. I … sort of went loony at this point and offered to ffmpeg the images into a video. Friend M on the other hand tried to figure out why the OpenCV code he wrote didn’t work. After a very intense 10 minutes of me searching for an alternative and Friend M trying to figure out why his code didn’t work, Friend M found that resizing all images to a common size worked. We think that all the images must be of the same size for it to work, but we’re not sure though. What we do know, much to our relief, is that it did work and our video was being stitched together.

The last thing was to use ffmpeg to fuse the audio and video together. Once that was done, our program worked. Beautifully. The beat sync worked relatively well but we couldn’t judge whether the video association was working properly. We had far too little data and time to experiment on it. However, we believed in the mathematics that our program employed and assumed that the program was indeed associating images with previous preferences. Once the judges arrived at 10:30, we decided to go 7th and in the meantime I took a 5 minute nap that felt like an hour. I was, after all, awake for a really long time. Once our turn was up, we presented pretty damn well. I would give us a 9.5 / 10. There was a slight hiccup, but that was very quickly resolved. All in all, I was happy with our work and our output. We were even able to do a live demo where we fed images of the hackathon that we had been taking through the night.
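For reference, the audio and video fusing step can be as small as one ffmpeg call. The sketch below is not the exact command we ran (I honestly don't remember the flags we used). The file names are placeholders and the helper names `build_mux_command` and `mux` are made up. It copies the video stream untouched, encodes the audio as AAC, and stops at whichever input ends first.

```python
import subprocess


def build_mux_command(video_path, audio_path, output_path):
    """Build an ffmpeg command that combines a silent video with an audio track."""
    return [
        "ffmpeg", "-y",        # overwrite the output file if it already exists
        "-i", video_path,      # input 0: the silent video
        "-i", audio_path,      # input 1: the song
        "-map", "0:v:0",       # take the video stream from input 0
        "-map", "1:a:0",       # take the audio stream from input 1
        "-c:v", "copy",        # do not re-encode the video
        "-c:a", "aac",         # encode the audio as AAC
        "-shortest",           # stop when the shorter input ends
        output_path,
    ]


def mux(video_path, audio_path, output_path):
    """Run the command; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(build_mux_command(video_path, audio_path, output_path), check=True)


if __name__ == "__main__":
    # Placeholder file names; this only prints the command, it does not run ffmpeg.
    print(" ".join(build_mux_command("silent.mp4", "song.mp3", "final.mp4")))
```

Stream-copying the video assumes the output container accepts whatever codec the video step produced. If it doesn't, swapping `copy` for a real encoder is the usual fallback.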

We got done at 12 PM. It had been 46 hours since the hackathon had started. I worked for a majority of it and was feeling super drowsy and my head began to throb slightly. I went back to eat lunch, but instead ate the cake they were serving rather than actual food. Once I returned to my room, I lay down on my bed to close my eyes for a bit. It was 12:30 PM. Next time I woke up, I checked my phone and it was 4:35 PM. The prize ceremony was to start at 4:30 PM. I rushed all the way over there in my hoodie and shorts and bolted through the door. Luckily I wasn’t late. They started a few minutes after I entered.

Turns out we’d won second place. Ah man, was I so glad. A rush of relief and happiness flooded through my body. All that effort did not go to waste. We won Rs. 15,000 (USD 210 at the time of writing this). Money. For myself. FINALLY!!!!!!!!! We split it 50-50 so I get around $105. Omg, all the electronics I can get with a hundred dollars. Squeals from excitement. I called my dad, mom and grandma to tell them. They were stoked. Anyway, I don’t know what I’m going to do with this money, but I sure am glad to have won it. It was a great 48 hours.

TL;DR: A friend and I won second place and ~$210 in a 48-ish hour start-up sprint hackathon conducted by The Entrepreneurship and Innovation Cell of our college by making an Automatic Video Editor that personalizes the primary edits of a sequence of images according to your previous history and style. It takes note of every time you edit something new and learns more about your preferences using a bit of association learning.

GitHub Link: Automatic-Video-Editor (Yeah, yeah. Very original. I know)

Note: If the above link doesn’t work, that means that I’ve still kept the repository on private access only. I plan to make it public within a day or two after updating the README. It will also mostly be archived, i.e. we both do not plan on working on this.

Note 2: As mentioned in the blog, the beat sync part will not be uploaded. Instead there will be a comment where the user has to input the durations of the images according to their own preference. I know this doesn’t make it very automatic anymore, but the current beat sync doesn’t work for all songs and it’s difficult to get it working (there are a lot of manual changes I have to do in the class). For this reason, I plan not to upload the beat sync code. However, the audio analysis code will be pushed to GitHub and anyone is free to use its results and attempt to re-create the beat sync part.